In the PDF below, the section "Total memory requirements (train time)" discusses this:

Memory usage and computational considerations

This article also touches on it:

Memory in neural networks is required to store input data, weight parameters and activations as an input propagates through the network.

In training, activations from a forward pass must be retained until they can be used to calculate the error gradients in the backwards pass.

As an example, the 50-layer ResNet network has ~26 million weight parameters and computes ~16 million activations in the forward pass. If you use a 32-bit floating-point value to store each weight and activation this would give a total storage requirement of 168 MB.
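The 168 MB figure in the quote is (weights + activations) × 4 bytes. The sketch below reproduces that arithmetic. It uses the approximate counts from the quote and decimal megabytes (10^6 bytes); the helper name is only for illustration.

```python
# Sketch: reproduce the ResNet-50 storage estimate quoted above.
# Counts are the approximate figures from the quote, not measured values.
NUM_WEIGHTS = 26_000_000      # ~26 million weight parameters
NUM_ACTIVATIONS = 16_000_000  # ~16 million activations per forward pass
BYTES_PER_FP32 = 4            # a 32-bit float occupies 4 bytes

def storage_bytes(num_weights: int, num_activations: int, bytes_per_value: int) -> int:
    """Bytes needed to hold every weight and every activation once."""
    return (num_weights + num_activations) * bytes_per_value

total = storage_bytes(NUM_WEIGHTS, NUM_ACTIVATIONS, BYTES_PER_FP32)
print(f"{total:,} bytes = {total / 1e6:.0f} MB")  # 168,000,000 bytes = 168 MB
```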

By using a lower precision value to store these weights and activations we could halve or even quarter this storage requirement. [2]
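The quote says lower precision could "halve or even quarter" storage. A minimal sketch of that claim follows. It assumes generic 16-bit and 8-bit value formats and reuses the same ~42 million values (weights plus activations) as above.

```python
# Sketch: the same weights + activations stored at lower precision.
# Assumption: 16-bit and 8-bit formats, i.e. 2 bytes and 1 byte per value.
NUM_VALUES = 26_000_000 + 16_000_000  # weights + activations, from the quote

def storage_bytes_at(num_values: int, bits_per_value: int) -> int:
    """Total bytes when every value uses `bits_per_value` bits."""
    return num_values * bits_per_value // 8

for bits in (32, 16, 8):
    print(f"{bits}-bit: {storage_bytes_at(NUM_VALUES, bits) / 1e6:.0f} MB")
# 32-bit: 168 MB
# 16-bit: 84 MB
# 8-bit: 42 MB
```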

The question "Why do convolutional neural networks use so much memory?" [3] also covers

the size of NN models.

You can also watch the lecture video for Stanford's CS231n Winter 2016, Lecture 11 "ConvNets in practice".

Memory usage during training

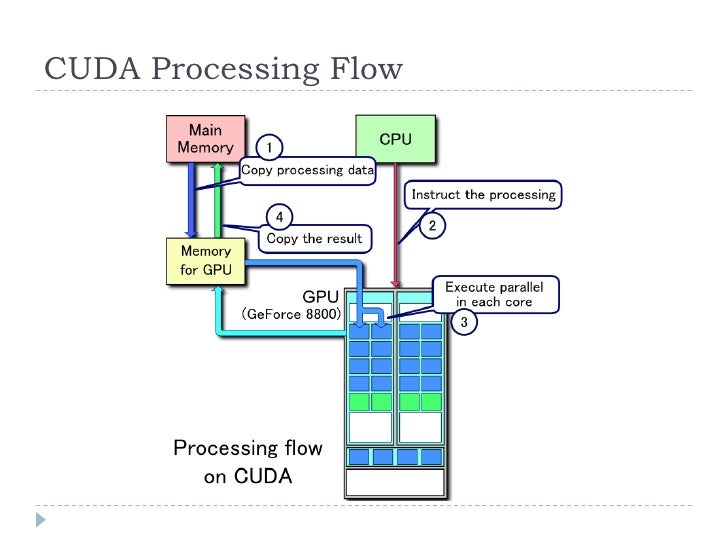

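When data is loaded, the image data is first read from the hard disk into random-access memory (RAM).

The buffered image data is then moved from RAM into the GPU's own memory.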
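To give a sense of scale for what is copied from RAM to the GPU per step, the sketch below sizes one dense input mini-batch. The image shape (3 × 224 × 224, RGB) and float32 storage are assumptions chosen for illustration and do not come from the original post. The function name is hypothetical.

```python
# Hypothetical sizing of one input mini-batch copied from RAM to the GPU.
# Assumption: 224x224 RGB images stored as float32 in (batch, C, H, W) layout.
def batch_bytes(batch_size: int, channels: int = 3, height: int = 224,
                width: int = 224, bytes_per_value: int = 4) -> int:
    """Bytes occupied by a dense (batch, channels, height, width) tensor."""
    return batch_size * channels * height * width * bytes_per_value

size = batch_bytes(32)
print(f"{size:,} bytes ~ {size / 1e6:.1f} MB")  # 19,267,584 bytes ~ 19.3 MB
```

This covers only the input images of a single mini-batch under the assumed shape. The whole dataset held in RAM, and the activations computed from it on the GPU, can be far larger.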

This data can easily reach several GB; the following passage describes the issue:

A greater memory challenge arises from GPUs' reliance on data being laid out as dense vectors so they can fill very wide single instruction multiple data (SIMD) compute engines, which they use to achieve high compute density.

CPUs use similar wide vector units to deliver high-performance arithmetic. In GPUs the vector paths are typically 1024 bits wide, so GPUs using 32-bit floating-point data typically parallelise the training data up into a mini-batch of 32 samples, to create 1024-bit-wide data vectors.

This mini-batch approach to synthesizing vector parallelism multiplies the number of activations by a factor of 32, growing the local storage requirement to over 2 GB. [2]
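The numbers in this passage fit together as follows. A 1024-bit vector holds 1024 / 32 = 32 float32 lanes. A mini-batch of 32 therefore multiplies the ~16 million ResNet-50 activations (from the earlier quote) by 32. A minimal sketch of that arithmetic:

```python
# Sketch of the arithmetic in the quote above.
VECTOR_BITS = 1024                    # typical GPU vector width, per the quote
FLOAT_BITS = 32                       # float32
ACTIVATIONS_PER_SAMPLE = 16_000_000   # ResNet-50 figure from the earlier quote

lanes = VECTOR_BITS // FLOAT_BITS     # samples packed side by side in one vector
activation_bytes = ACTIVATIONS_PER_SAMPLE * lanes * FLOAT_BITS // 8
print(lanes, f"{activation_bytes / 1e9:.3f} GB")  # 32 2.048 GB
```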

For the next epoch, the data is again moved from RAM into the GPU's memory.
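The sketch below is a minimal pure-Python simulation with no real GPU. It shows the flow described above: the dataset is read into RAM once, and every epoch copies each mini-batch to the device again. `to_device` and `train` are hypothetical stand-ins, not a real framework API.

```python
from typing import List

def to_device(batch: bytes) -> bytearray:
    """Hypothetical stand-in for a host-to-GPU copy: returns a new buffer."""
    return bytearray(batch)

def train(dataset_in_ram: List[bytes], epochs: int) -> int:
    """Run `epochs` passes; return total bytes copied to the pretend GPU."""
    copied = 0
    for _ in range(epochs):
        for batch in dataset_in_ram:
            device_batch = to_device(batch)  # copied again on every epoch
            copied += len(device_batch)
            # forward and backward passes would run on device_batch here
    return copied

# Hypothetical dataset: 4 mini-batches of 1,000 bytes, already read from disk.
dataset = [bytes(1000) for _ in range(4)]
print(train(dataset, epochs=3))  # 12000
```

The copied volume grows linearly with the number of epochs, because the disk-to-RAM read happens once but the RAM-to-GPU transfer is repeated.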

Also, if the batch size is increased, the image data takes up more memory.

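For example, changing the batch size from 32 to 64 doubles the memory taken by the images and activations of each training iteration; the memory for the weights themselves does not grow.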
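This minimal sketch reuses the ResNet-50 figures quoted earlier (fp32). It shows that only the batch-dependent part doubles, so the total grows slightly less than 2×. It deliberately ignores gradients, optimizer state and the input images, so treat the totals as a simplified estimate.

```python
# Simplified estimate: fixed weight memory + per-sample activation memory.
WEIGHT_BYTES = 26_000_000 * 4                 # ~26M fp32 weights
ACTIVATION_BYTES_PER_SAMPLE = 16_000_000 * 4  # ~16M fp32 activations per sample

def training_memory_bytes(batch_size: int) -> int:
    """Weights (independent of batch size) plus activations for the whole batch."""
    return WEIGHT_BYTES + ACTIVATION_BYTES_PER_SAMPLE * batch_size

for b in (32, 64):
    print(b, f"{training_memory_bytes(b) / 1e9:.3f} GB")
# 32 2.152 GB
# 64 4.200 GB
```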

See also the article A Full Hardware Guide to Deep Learning.

Reading the part from "Asynchronous mini-batch allocation" through "Hard drive/SSD" is enough.

[1] Memory usage and computational considerations

[2] Why is so much memory needed for deep neural networks?
